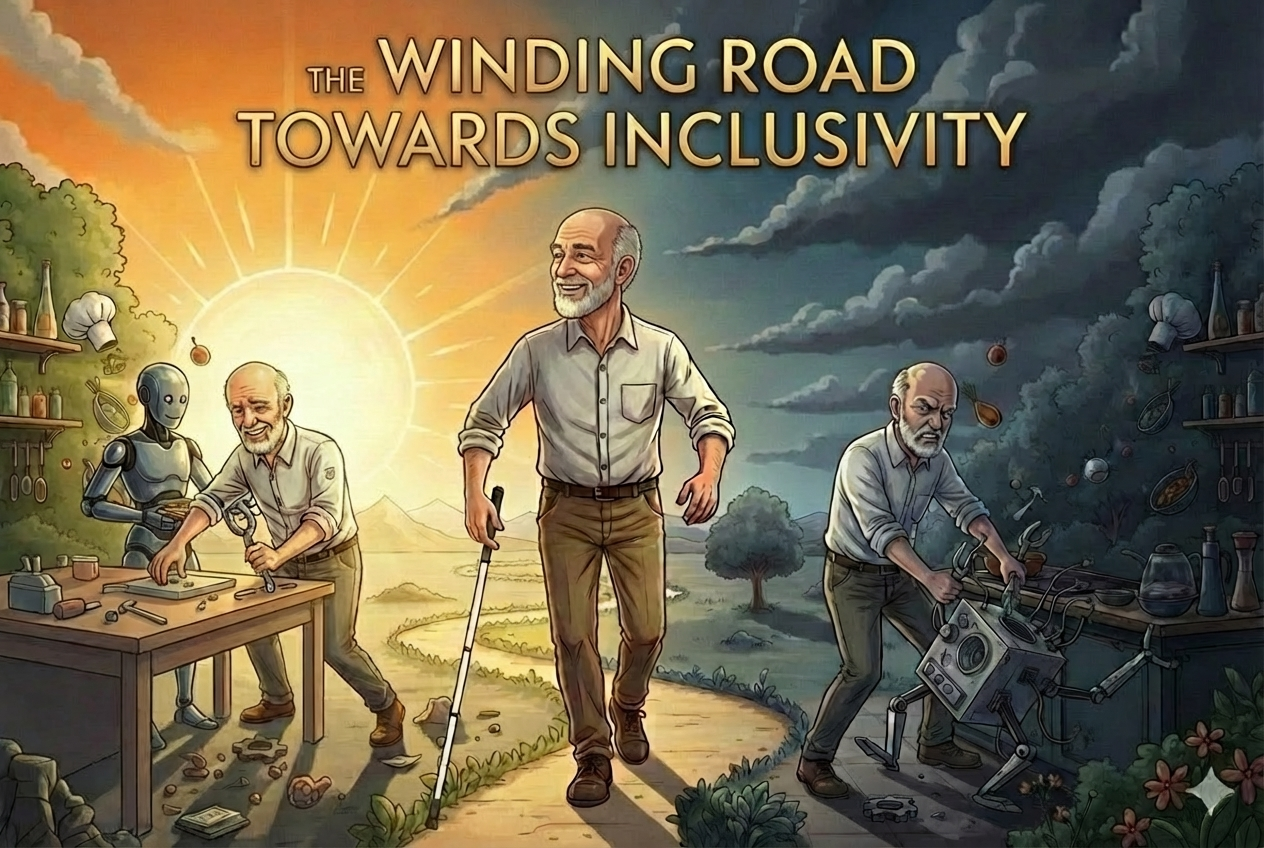

Looking back at 2025: A Blind Perspective on AI Progress

2025 saw big gains in LLMs, with smaller models now very capable. But human-level embodied AI is still years away, and AGI is likely a decade off. Assistive AI hasn’t progressed much yet, but I’m optimistic, and I’m excited to announce an Assistive AI hackathon at Entrepreneurs First on Jan 17.

I say “see you soon” all the time.

I say it automatically, without thinking. Which is odd, because I’m blind—and have been for a very long time.

Sighted language is so deeply baked into everyday speech that I use it without noticing. “Looking back”, “Looking good”, “I see what you mean”, “My vision for this blog”. Even the word perspective reached us through Middle English from the Latin perspicere, “to see clearly.” As a blind man, I can’t look anywhere. Yet my language insists that I do.

That small contradiction sits at the heart of this blog.

The Open Eyed Man focuses on life as a blind person in a relentlessly visual world, and on the hope that AI might finally help close that gap. Since launching the blog on June 3rd and writing 28 weekly posts, I’ve become even more aware of just how dominant sight is among our senses, not only in technology but in how we think, speak, and design.

Back in June, it was impossible not to be swept up by the pace of AI progress. Large language models were becoming startlingly capable. Conversational voice was edging toward something genuinely lifelike. Personal AI agents were starting to feel plausible rather than promotional. At the same time, I was acutely aware of a harder truth: the physical world is visual by default, and assistive technology for blind people remains frustratingly brittle.

So, looking back over the year, where has AI exceeded my expectations, and where has it fallen short?

In summary:

- Mainstream AI technology has made spectacular progress.

- Accessibility for blind users has barely moved.

- The social impact of AI, particularly on work, is becoming harder to ignore.

- AI is now firmly in the mainstream consciousness, no longer introduced with the phrase “so-called artificial intelligence.”

- On one side, venture capital continues to pour huge sums into foundational AI labs like OpenAI and Anthropic. On the other, scepticism is growing—warnings proliferate of an AI bubble about to burst, taking the global economy with it.

I’m not disheartened that accessibility hasn’t yet improved. Much of the underlying technology is still maturing. AI shopping agents aren’t widely used. Jony Ive’s much-hyped personal AI device remains firmly in stealth. And humanoid robots are still in their infancy. Because transformative assistive tech will build on these foundations, it’s unsurprising that accessibility still feels stuck in the dark ages.

Looking forward, predictions about Artificial General Intelligence aren’t converging; they’re diverging. Elon Musk predicted AGI by the end of 2025. That didn’t happen, yet he’s now suggesting it could arrive as soon as 2026. In contrast, Andrej Karpathy, one of the most respected AI researchers, thinks it’s at least a decade away.

Some of this gap comes down to vested interests. But two deeper issues matter more. First, there’s broad agreement that simply scaling models with more data and compute won’t be enough; real architectural innovation is still needed. Second, we don’t even agree on what AGI is, or how we would unambiguously measure it.

Definitions of AGI that exclude embodied tasks—like unloading a dishwasher—feel increasingly nonsensical. Those exclusions once made sense, when building versatile robots was a hard physical problem. Today, robot construction is largely solved. If intelligence in animals and humans includes navigating the physical world to survive and thrive, then AGI should include that too.

Looking ahead to 2026, I expect the impact of AI on junior white-collar jobs to become clearer, with significant social and political consequences. I also worry about the likelihood of major cyberattacks turbocharged by AI. But despite these concerns, I remain very bullish, particularly about what’s coming next for assistive technology.

Here are four areas that genuinely excite me.

First, smart glasses with open interfaces. Meta has released a public Device Access Toolkit, and other products with richer interfaces, such as XY Glasses, are already available. Warby Parker has announced a collaboration with Google to enter the smart-glasses market in 2026. If Google integrates Gemini Live, its real-time conversational assistant that can interpret live video, into wearable hardware, that could be a real step change for blind users. The difference between delayed, clunky descriptions and fast, conversational awareness is the difference between confidence and hesitation.

Second, computer-use agents. These systems operate visual interfaces—browsers and apps—by simulating mouse clicks and keystrokes, just as a human would. For blind users, this matters enormously. Many websites are technically “accessible” yet practically unusable. A personal AI agent that can see and operate these interfaces on our behalf could bypass entire layers of frustration.

Third, embodied AI—robots. Fully autonomous humanoid robots are still several years away, but capable teleoperated robots should arrive in 2026. The groundwork being laid for robots to navigate the physical world is directly relevant to blind navigation. I’ve pre-ordered the Glide robotic “guide dog,” expected in 2026, and I’m genuinely curious to see how it performs in the messy reality of pavements, crossings, and human unpredictability.

Finally, AI models are getting smaller, cheaper, and faster. Models that can run locally on high-end laptops, and eventually phones, will proliferate. Apple is betting heavily on on-device AI rather than the cloud. For accessibility, this is crucial. Many assistive tasks demand instant feedback. Silence, lag, or a spinning network icon can be more disabling than no AI at all.

Thank you for indulging my blathering and occasional ranting during 2025. The Open Eyed Man is taking a short break next week, but normal service resumes on January 6th. There’s a lot to look forward to. On January 17th, I’m hosting an assistive-technology hackathon in Shoreditch with Raspberry Pi and Entrepreneurs First. Around 50 extremely smart AI engineers will spend the day prototyping ideas using cameras, AI accelerators, low-cost computers, expensive smartphones, smart glasses, string, sealing wax, and whatever else gets the job done. I can’t wait.

If anyone wants to join in the hackathon, absolutely do let me know. In the meantime, I wish everyone a most enjoyable festive break.