On Job Board Games of Chance and Skill

Here’s a deeper dive on a traffic light framework for assessing AI’s impact on jobs. Spotting roles safe from AI over 10–15 years is hard, and over a lifetime even harder. But this framework makes it less of a lottery.

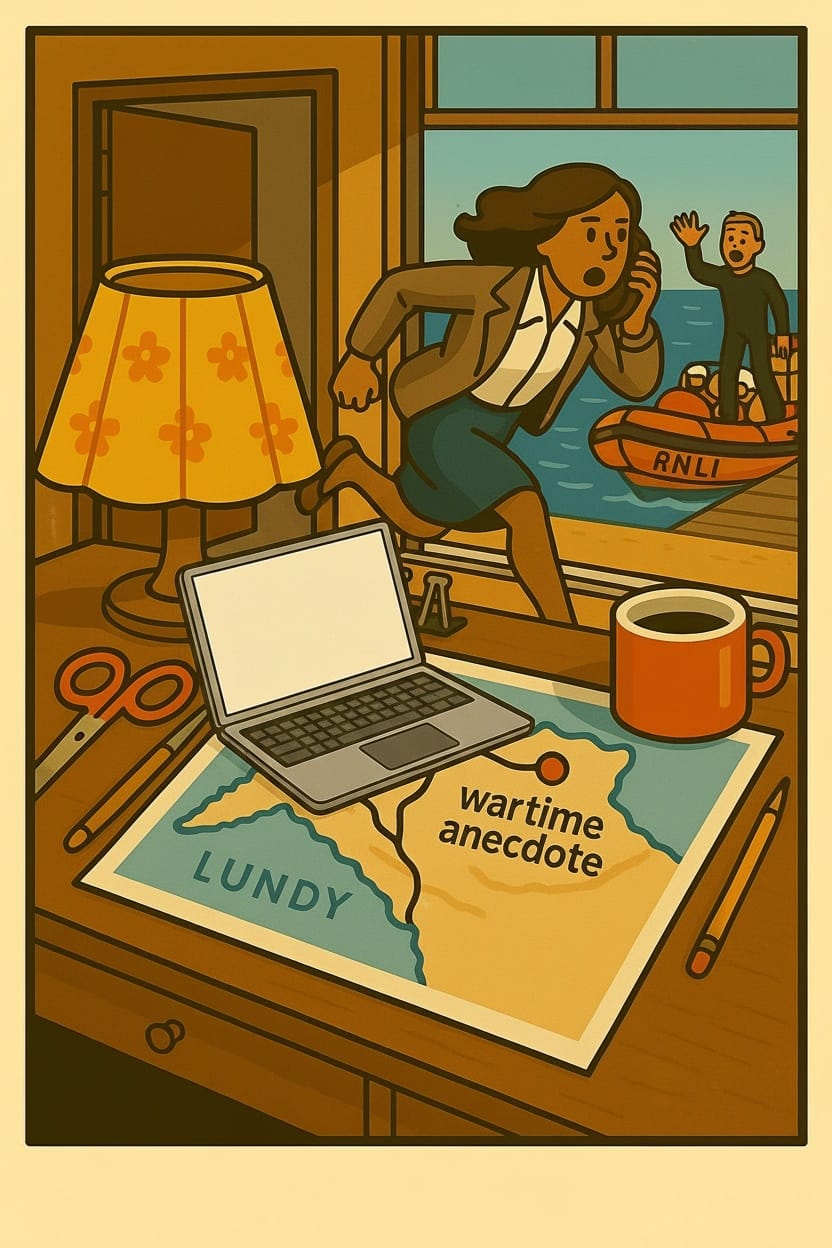

You really do need to read the final paragraph below to understand the significance of the cover image to this post.

In my post entitled Traffic Lights and Traffic Cops I set out the following acronymic expansion of red, amber and green as a framework for considering which jobs may be more or less exposed to AI displacement (i.e. where the number of humans doing the specific jobs will decrease more or less rapidly, with red seeing the most precipitous erosion).

RED (high risk): Routine, Explicit, Digital

AMBER (medium risk): Augmentable, Mechanisable, Bounded, Encodable, Removable

GREEN (low risk): Gregarious, Regulated, Embodied, Educative, Nebulous

The cover image for that post evokes a universally loved board game. That game’s mix of chance and skill chimes very nicely with the challenges of choosing a job today that will still exist in 40 years’ time.

AI-resistant job selection is not entirely a lottery, and this post further explains the RED, AMBER and GREEN categorisation as a lens for making less risky choices. Having said that, there remains an element of unpredictability/chance. Some jobs, like programmer and lawyer, looked very safe 5 years ago but certainly will not look safe 5 years hence. So it feels as though the longevity of any job choice is always at the mercy of differential technological progress contextualised by regulatory/societal evolution.

There is definitely a game to be built with seven specific jobs on each side of the board, the sides respectively coloured red, amber, green and white (the white side is for jobs like AI Ethicist, Robopsychologist and Synthetic Data Curator which barely yet exist). There would of course also be Chance and Community Chest cards with edicts such as:

- ChatGPT-7 achieves AGI – forfeit all your RED jobs and go directly to Retraining Jail

- AI Displacement Windfall announced – collect £10,000 from the bank

- Humanoid robots construct house overnight – immediately surrender all your trade apprenticeship certificates

You probably get the gist, but if you don’t, why not buy yourself a Monopoly set for 35 hours of competitive weekly fun in your newly AI-displaced working week?

Here’s a deeper dive into the three job categories: RED, AMBER and GREEN.

RED

The RED (high risk) category is relatively straightforward to sniff test. Does the job entail doing a defined set of tasks again and again? Is there an extremely explicit set of instructions to train new recruits? Is it desk-bound, using a computer or a phone? If the answer to all three questions is Yes, then beware – your card is marked.
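As a minimal sketch, the three-question sniff test can be written as a simple check. The `Job` class, its field names and the example jobs are hypothetical and only there for illustration; the one rule taken from the text is that all three answers must be Yes.

```python
from dataclasses import dataclass


@dataclass
class Job:
    """Hypothetical record of the three RED sniff-test answers for a job."""
    title: str
    routine: bool   # a defined set of tasks done again and again?
    explicit: bool  # extremely explicit instructions for training new recruits?
    digital: bool   # desk-bound, using a computer or a phone?


def is_red(job: Job) -> bool:
    """Return True only when all three sniff-test answers are Yes."""
    return job.routine and job.explicit and job.digital


# Illustrative answers only (assumptions, not assessments of real roles).
data_mover = Job("copy-and-paste data mover", routine=True, explicit=True, digital=True)
publican = Job("publican", routine=False, explicit=False, digital=False)

print(data_mover.title, "is RED:", is_red(data_mover))
print(publican.title, "is RED:", is_red(publican))
```

A job that fails the test is not thereby safe; it simply falls to the AMBER and GREEN characteristics to say more.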

The job does not have to be simple from a human perspective. For example, programming non-trivial algorithms definitely exercises my mental capacity, and hunting down bugs is intensely time-consuming and far from straightforward. Some jobs in this category also require several years of full-time training to become proficient – think doctors, lawyers and financial analysts.

But it is worth remembering that in the days before spreadsheets or pocket calculators, some jobs which now seem mundane were highly demanding.

Moravec's paradox has become widely recognised in the loose form “Everything that humans find hard, computers find easy; and conversely, everything that humans find easy, computers find hard”. This is a gross oversimplification and is in any case increasingly untrue. AI can now do stuff in seconds that takes highly trained humans many, many hours. AI can not only do hard sums, but can also diagnose medical conditions, undertake very deep legal review of complex contracts or case law, and perform many other tasks that rely upon lots and lots of knowledge.

So anything we would consider ‘knowledge work’ but without any physical elements is likely to be red. There are of course other jobs in the red category that do not require much knowledge at all. For example, if you know how to use cut and paste and are willing to sit in front of a screen all day, there are currently hundreds of thousands of jobs simply moving data between unconnected online systems. These jobs are arguably at the highest risk of all and will not last.

Until recently any job that involved voice conversations seemed quite safe, because realistic AI-powered voice was not possible. However, although you may not enjoy the actual voice which narrates this blog, it is an uncannily realistic clone of my own voice and is also capable of holding intelligent conversations. So the red category now extends to anyone working in a call centre following a well-defined script or set of guidelines for how to deal with anything from a table reservation request to a full-blooded complaint or the telephonic screening of drug trial candidates.

AMBER

As befits its role as the middle category, this is the most nuanced and certainly the most debatable regarding timelines. The characteristics listed here are ones that make a job more rather than less prone to AI displacement. The more of these characteristics that apply to a role, the more economically susceptible it is to AI/robotic fulfilment.

Augmentable jobs are ones where humans are already being made more efficient by AI tools. For example, radiology protocols often require that a scan is read by primary and secondary radiologists independently, and if they disagree then a third radiologist arbitrates. This is effective at catching human error but expensive. We are already seeing the secondary radiologist being replaced in some scenarios by AI. This is a form of human augmentation which is useful because there is an acute shortage of radiologists. But the direction of travel seems clear. The AI already has superhuman ability to spot tumours, so why wouldn’t the primary reviewer become the AI? And when the number of errors caught by the secondary (human) reviewer becomes negligible, regulations will change and we will trust the AI, with a radical drop in the number of human radiologists we need.

So, augmented today often means displaced tomorrow.

Mechanisable is less subtle. This is just a matter of time in many jobs. Where the humanoid form is particularly important, we may still be 4-6 years away from reliable enough robots, although the economics look extremely favourable to robots when 24x7 operation is worthwhile.

Bounded refers to how well the full breadth of the job can be written down or observed. For jobs requiring interaction with the physical world, a human master/robot apprentice model will probably be an important way of collecting training data. A human apprentice eventually becomes a master of their trade and takes over from their teacher. Likewise, robot apprentices will take over from their masters. But thereafter the skills will be transferable from one robot to all its successors through an overnight data dump. In other words, a 3-year apprenticeship for a human today might become a 3-year apprenticeship for a robot in 2027, which then becomes a 3-hour apprenticeship for a thousand robots in 2030.

The Encodable AMBER characteristic has similarities to the Explicit RED characteristic. In the RED category, the Explicit characteristic means all the rules and procedures for the job can be found in documentation today. In contrast, AMBER Encodable jobs are less likely to be exhaustively documented today, relying often on word of mouth and ‘on the job’ training. But there are many of these Encodable AMBER jobs, particularly where the scope is well bounded, that have little or no need for extensive master/apprentice learning by observing once they have been fully documented (encoded).

The final AMBER characteristic, Removable, is hard to anticipate, because the job ceases to exist due to changes in other jobs or behaviours. A great historical example is ostlers (or hostlers if you’re American). Hands up everyone who has heard of hostlers/ostlers, keep your hand up if you’ve ever met one and still keep your hand up if he was actually tending to your horses as you dined at the staging inn. It’s not that the tending of horses became automated, but simply that the horses themselves became automated and their replacements didn’t need staging, let alone mucking out. I wonder how many ostlers retrained as petrol pump attendants.

The example may seem arcane, but it does illustrate how challenging it is today to predict jobs that will disappear as a secondary effect. A few years ago Benedict Evans speculated about a causal chain in which we need fewer A&E doctors because self-driving vehicles are 1,000 times less accident prone than human drivers. More autonomous vehicles equals fewer accidents equals fewer serious injuries equals less need for serious injury fixer-uppers. Of course self-driving cars have taken longer to emerge than the optimists expected, but with Waymo leading the way, they are already on the public roads and are demonstrating amazing safety records. So maybe Benedict’s prediction is just a question of when, not if.

GREEN

The characteristics in the RED and AMBER categories are ones that heighten the risk of AI displacement. In this final, safer GREEN category, the characteristics are ones that militate *against* AI displacement. The characteristics are quite diverse and it would be unusual for a single job to exhibit all 5 of the GREEN characteristics, but any one of them makes the job relatively AI immune.
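A rough sketch of how the AMBER and GREEN characteristics might be tallied for a role follows. The two rules come from the text: more AMBER characteristics means more exposure, and any single GREEN characteristic offers relative protection. The function names, the lowercase trait labels and the example trait set are assumptions made purely for illustration, and the text does not say how the two signals should be weighed against each other when a job shows both.

```python
# Characteristic names taken from the AMBER and GREEN acronyms.
AMBER = {"augmentable", "mechanisable", "bounded", "encodable", "removable"}
GREEN = {"gregarious", "regulated", "embodied", "educative", "nebulous"}


def amber_score(traits: set[str]) -> int:
    """Count the AMBER characteristics present; a higher count means more exposed."""
    return len(traits & AMBER)


def has_green_protection(traits: set[str]) -> bool:
    """Return True if at least one GREEN characteristic is present."""
    return bool(traits & GREEN)


# Hypothetical trait set for an imaginary role (an assumption, not an assessment).
example_traits = {"augmentable", "bounded", "regulated"}

print("AMBER characteristics:", amber_score(example_traits))
print("Any GREEN protection:", has_green_protection(example_traits))
```

A tally like this is only a prompt for thinking about a role; it says nothing about timelines.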

Gregarious jobs are ones that you cannot perform in isolation. Engaging with many other people at a deep and not transactional level is a good defensive trait. I’m not talking about online influencers, but thinking more about publicans, front of house managers in hotels/restaurants, football team managers, platoon commanders and politicians.

Politicians also probably exhibit the Regulated characteristic. Regulated jobs must be performed by humans for regulatory/legal reasons, such as judges, business directors and AI Ethicists. Having said that, we are not very far from the world’s first unicorn with only one human employee – the legal custodian of a business operated entirely by AI agents.

Embodied jobs are ones that require a combination of knowledge and a physical human body. The need for a body might be because the job is so physically complex, specialised or varied that it will take many years for robots to come up to speed. Or it may be because the value of the job derives explicitly from its human performance. For example, I doubt anyone will be interested in watching robots beating ten bells out of each other in a boxing ring, or watching robots sinking more “hole in one”s at the British Open than Kim Jong-Il. More generally, and unsurprisingly, there does seem to be something uniquely human about feats of human endeavour.

Educative also makes it into my GREEN category, intuitively, because there seems to be a gargantuan task ahead of us and our children to understand what it is to be human in an AI-dominated world. Teaching will be very different, and whether students use AI to write their essays will be a ridiculous non-debate. Of course students will use AI maximally, and often much better than their teachers. But there will be lots to learn about living safely in an AI world, about thinking critically (even when you have a robot with a brain the size of a planet at your side), and about navigating an ever-changing, uncertain job market.

And so finally to Nebulous… In an undoubtedly uncertain job market, your best bet might just be to have an uncertain job. That’s to say, if no-one can really define, or even describe what you do, then it’s hard to see how it can be automated away. But do watch out because you’re not immune from being entirely bypassed as everything around you changes.

With all this uncertainty, having two or more very different jobs could be a great strategy. A product manager in the education sector who volunteers in a lifeboat crew, makes/sells very beautiful lampshades and also leads guided tours of Lundy is well protected – I do actually know that woman.