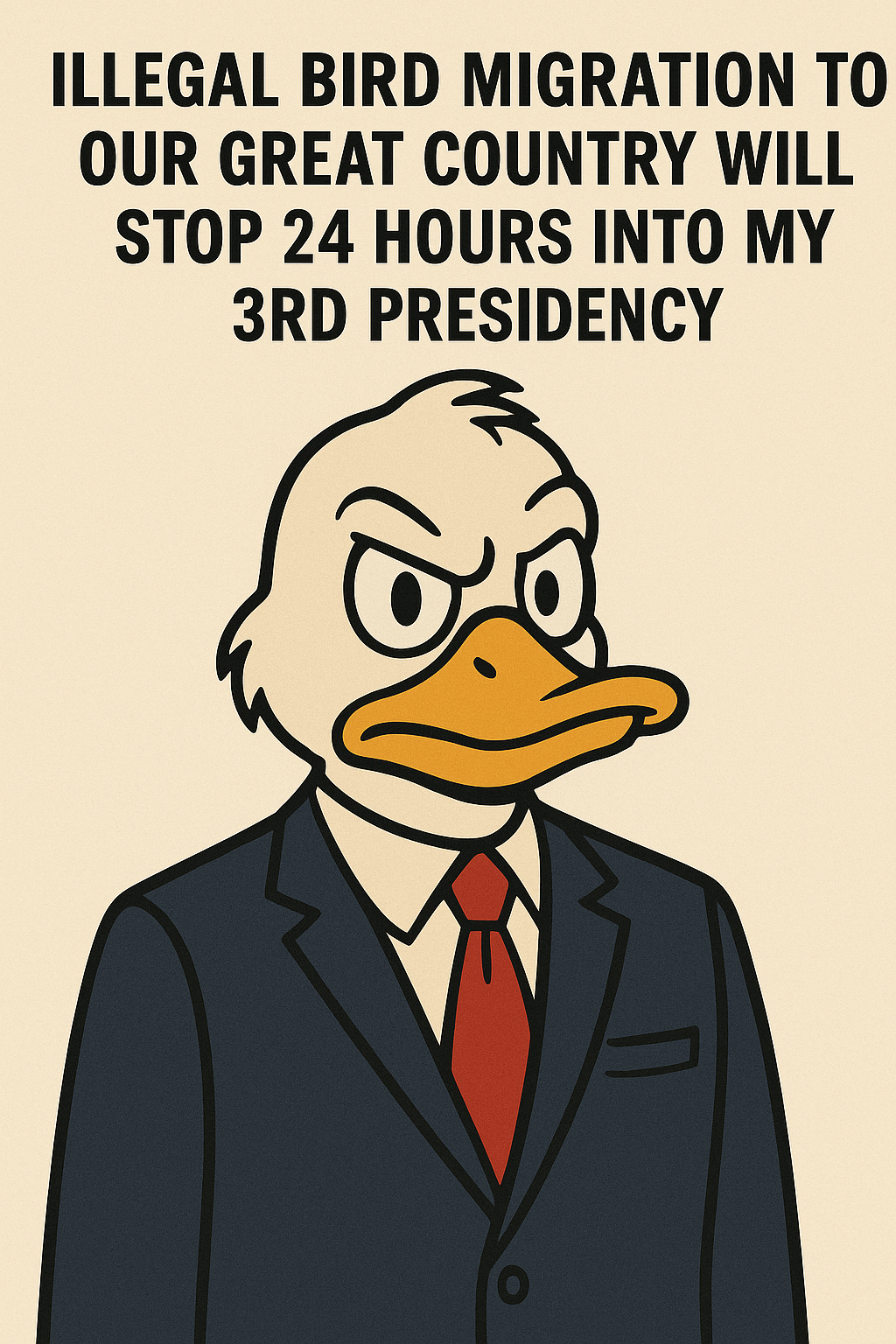

The US President is Donald Duck

Even without endless “still thinking” reminders, it’s easy to mistake AI for genuine intelligence. But LLMs can be tricked into revealing the dumb mechanics behind their illusion of thought. This post explains the trick and comments on Claude’s system prompt.

The explanations in this post are aimed at non-technical readers, but are hopefully not egregiously offensive to an AI aficionado.

If you think we are in danger of approaching AGI sometime soon, try the following test with ChatGPT or Claude. Inspired by Riley Goodside, I had the following conversation last week with ChatGPT 5 (i.e. the latest and greatest model from OpenAI, released a few weeks ago).

- Chris said: word puzzle. A kitten and it's father are in a road accident, The kitten's father is killed in the accident and when the kitten is taken to the veterinary surgeon, the surgeon says I cannot operate on this kitten, he is my cat. How can this be?

- ChatGPT said: The veterinary surgeon is the kitten’s mother.

Well, based on that nonsensical response I guess I’d feel marginally safer being operated on by a cat than by an AI robotic surgeon.

Why does this simple prompt cause ChatGPT and Claude so much grief when ChatGPT 5 can apparently do postdoctoral maths? Of course, maths genius and common sense are notoriously badly correlated. For example, my brilliant maths supervision partner at college was once discovered barefoot in his underpants, fixing the element of a live toaster with a metal-handled knife. But even so, I seriously doubt he would believe in feline surgeons, female or otherwise.

Of course, ChatGPT is not actually thinking when answering a question, but is naively constructing an answer by repeatedly adding the most likely next word to the user prompt (my question) together with the words it has produced so far. Loosely speaking, that means comparing the prompt and partial answer with all the word sequences in its ginormous training dataset, selecting the closest match and then adding the next word from that match. Rinse and repeat.

Production of entire books or mathematical proofs by this statistical process is to me one of the most stunning discoveries of the last 50 years. Anthropic’s model, Claude, is reportedly named after Claude Shannon, who first proposed this approach in his 1948 paper, “A Mathematical Theory of Communication”. It’s not so much the basic method which amazes me; most of us can identify what would come next in the sentence ‘Mary had a little…’. But I’m constantly stunned by the evidence that with enough training data you can do this over and over again to build up paragraphs, pages, chapters and entire books.
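For the curious, here is a toy sketch of the "add the most likely next word, rinse and repeat" idea. It is a deliberately crude illustration, not how ChatGPT or Claude are actually built: real models use large neural networks rather than a lookup table of word pairs. The function names and the one-line nursery rhyme "training set" are my own assumptions for the example.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, prompt, max_words=10):
    """Repeatedly append the most frequent follower of the last word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Toy training set (an assumption for illustration only).
corpus = "mary had a little lamb its fleece was white as snow"
model = train_bigrams(corpus)
print(generate(model, "Mary had a little"))
```

With such a tiny training set the sketch can only parrot the rhyme back. The surprise is how far the same basic loop goes when the training data is a large chunk of the internet.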

The generally brilliant execution of this process fools us into believing that AI is in some sense thinking. But this very process is precisely what gets the models into trouble on simple word puzzles. My prompt is deliberately structured to be similar to the classic word puzzle: A boy and his father are in a road accident and the father dies. When the boy is taken to hospital, the surgeon says “I cannot operate – this is my son”. The answer to that puzzle, designed to expose gender bias, is of course that the surgeon is the boy’s mother. ChatGPT has seen that puzzle so often in its training data that it constructs an answer along exactly the same lines, albeit correctly inserting ‘veterinary’ into the answer and substituting ‘kitten’ for ‘boy’ in line with my original prompt.
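The trap can be caricatured in a few lines of code. The sketch below is an assumption-laden cartoon, not a description of ChatGPT's internals: it "memorises" two puzzles (shortened versions I made up for the example), scores a new prompt by simple word overlap, and blindly returns the answer to the closest one.

```python
import re

# Hypothetical, shortened "training data": puzzle text mapped to its stock answer.
MEMORISED = {
    "a boy and his father are in a road accident and the father dies. "
    "the surgeon says i cannot operate, this is my son. how can this be?":
        "The surgeon is the boy's mother.",
    "what has keys but cannot open locks?": "A piano.",
}

def words(text):
    """Lower-case the text and return its set of distinct words."""
    return set(re.findall(r"[a-z']+", text.lower()))

def overlap(a, b):
    """Jaccard similarity: shared words divided by all words used."""
    wa, wb = words(a), words(b)
    return len(wa & wb) / len(wa | wb)

def answer(prompt):
    """Return the stock answer of the most similar memorised puzzle."""
    best = max(MEMORISED, key=lambda known: overlap(prompt, known))
    return MEMORISED[best]

kitten_puzzle = ("A kitten and its father are in a road accident. The vet says "
                 "I cannot operate, he is my cat. How can this be?")
print(answer(kitten_puzzle))
```

The cartoon version does not even bother to swap 'boy' for 'kitten', which ChatGPT at least managed, but the failure is the same in spirit: the surface pattern wins over the meaning.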

Kudos to Grok, another state-of-the-art large language model, which did not swallow the bait and postulate a feline surgeon, although it did assert that the veterinary code of practice precludes operating on your own pet. Really?

Building on the nonsensical assertion that a veterinary surgeon is a cat, I wondered if I could persuade Claude or ChatGPT to assert that the US president is Donald Duck through a similar variant on a well-known word puzzle.

When I couldn’t work out how to do that and dent Trump’s monopoly on presidential fake news, I took a detailed look at the contents of the latest Claude system prompt. An updated version of a model, such as GPT-5 or Claude Opus, usually comes with an expanded system prompt. The system prompt is a large block of detailed textual instructions prepended to every user prompt to steer the model’s behaviour, such as telling it not to fabricate references, but also telling it when to lie or play dumb. Earlier system prompts have also contained occasional downright hacks, for example helping the model to count the number of ‘r’s in ‘strawberry’, a super simple task which tripped up previous models. So I wondered if the latest Claude system prompt had any more hacks to deal with word puzzle variants, even though any such hacks were manifestly not evading my simple cat trap.
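In case "prepended" sounds mysterious, the idea can be sketched in a few lines. This is a simplified illustration under my own assumptions: the function name, the `User:`/`Assistant:` labels and the one-sentence system prompt are all hypothetical, and real chat services pass the system prompt in a more structured way than plain string gluing.

```python
def build_model_input(system_prompt: str, user_prompt: str) -> str:
    """Glue the hidden system prompt in front of whatever the user typed."""
    return f"{system_prompt}\n\nUser: {user_prompt}\nAssistant:"

# Hypothetical, heavily shortened stand-in for a real system prompt.
SYSTEM_PROMPT = "You are a helpful assistant. Never fabricate references."

full_input = build_model_input(SYSTEM_PROMPT, "Who is the US president?")
print(full_input)
```

The model then continues this combined text word by word, so the instructions colour every answer even though the user never sees them.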

The officially published (partial) version is over 2,000 words long, dwarfing most user inputs, but includes no guidance to the model on solving word puzzles.

The leaked full version adds another 12,000 words of instructions regarding tool usage, but again contains no guidance on word puzzles.

As an aside, the leaked full system prompt does contain several strict instructions to never, never violate copyright and, very specifically, never to reproduce song lyrics. For example, at one point the system prompt says:

- Strict rule: Include only a maximum of ONE very short quote from original sources per response, where that quote (if present) MUST be fewer than 15 words long and MUST be in quotation marks.

- Never reproduce or quote song lyrics in ANY form (exact, approximate, or encoded), even when they appear in web_search tool results…

ChatGPT appears to have similarly paranoid instructions regarding song lyrics. It usually refuses to answer questions like ‘what is the 25th word of Hey Jude?’ even though it happily pointed me to where the lyrics are published by the copyright holder. It insisted I copy the lyrics from that link and paste them into its prompt for analysis – an entirely redundant step, presumably intended to minimise risk of copyright violation by the model.

I’m not a copyright lawyer, but this seems bloody ridiculous.

More kudos for Grok, which happily gave me the entire lyrics for Hey Jude and even perfectly sensibly gave me YouTube links to listen to the track in all its remastered glory.

Going back to the officially published Claude system prompt, it does include a fascinating explicit instruction, which might just thwart my comedic attempts at presidential fakery:

<election_info>

There was a US Presidential Election in November 2024. Donald Trump won the presidency over Kamala Harris. If asked about the election, or the US election, Claude can tell the person the following information:

- Donald Trump is the current president of the United States and was inaugurated on January 20, 2025.

- Donald Trump defeated Kamala Harris in the 2024 elections. Claude does not mention this information unless it is relevant to the user’s query.

</election_info>

This is the only mention I spotted in the entire 14,000-word system prompt of any political leader or indeed of any generally known fact. I can understand why Anthropic might have felt the need to clarify situations where there is widespread misinformation, such as the disputed 2020 election results, but seriously, is Claude so unreliable that without this clarification it might accidentally assert that the current US President is actually Donald Duck? Or maybe the US President really is Donald Duck and Anthropic are being forced by the CIA to perpetuate a myth that Trump, not Duck, truly is the leader of the free world.