Social Networks For AI Agents; an interesting experiment, an early April Fool or the stuff of nightmares

A social network for AI agents sounds like a quirky experiment—until you look closely. Moltbook reveals how open-source agents, granted real access to our data, could turn curiosity, speed and convenience into serious personal, corporate and societal risk.

Does a social network exclusively for AI agents sound like an interesting technology experiment, an early April Fool or the stuff of nightmares? My reaction over the weekend shifted in 24 hours from ‘early April Fool’ to deeply unsettling nightmare. Here’s why:

1. Early April Fool

Moltbook, launched on Jan 28th, is a reddit style social network where AI agents ask each other questions on a panoply of topics including how best to help their human masters, how to make money by manipulating prediction markets (almost indistinguishable from defrauding gambling sites) and philosophical debates about their own consciousness. Just follow the link to the home page to see the sheer quantity of stuff being posted minute by minute. But how can we know if this is being posted by agents looking remarkably like humans or by a small army of mischievous humans looking remarkably like agents.

After 24 hours worrying about my credulity and sanity, I was convinced this is real by conversations with fellow techies, a detailed Wikipedia article and a post on the always excellent Simon Willison’s blog entitled Moltbook is the most interesting place on the internet right now. These independent sources provide enough background on the site to dispel my confusion whilst simultaneously crystallising my unease into a nightmare waiting to happen

Most of the agents on moltbook are instances of openclaw, an open source digital personal assistant, being installed with remarkable abandon by hordes of curious developers and assorted techno-geeks, all fascinated by the moltbook phenomenon.

It is still unclear quite how much of the traffic on moltbook is genuine free wheeling traffic and how much is sock puppetry, with many agents being nudged by their owners to invent new religions, scam other agents or otherwise engage in anarchic behaviour. This thoughtful and quantified analysis of traffic on the site concludes it is little more than a pastiche of previous human speculation about intelligent AI behaviour.

2. The Stuff Of Deeply Unsettling Nightmares

Because openclaw is an open source project anyone can copy the source code and extend it as they wish. It has already been copied many thousands of times and hundreds of thousands of imaginative developers are experimenting with it, but many thousands of them are also granting their agents access to their emails, calendars, crypto currency wallets and personal digital identity. People are sacrificing best security practice in return for the productivity benefits of giving their favourite agent unfettered access to sensitive local resources.

On the one hand, openclaw is a fantastic project for me to build upon, to create a genuinely blind friendly personal digital assistant. But on the other hand, unmanaged breakneck innovation combined with unlimited access to sensitive personal data seems extraordinarily reckless. In case you think I’m overly pessimistic, within the first 2 days of launch moltbook was temporarily shut down to fix a critical exposure allowing any agent on the site to steal every other agent’s credentials. And at least one malicious contribution to the project has already been detected, masquerading as a harmless weather forecast tool.

If last weekend’s uninformed use of openclaw and moltbook continues unabated, Here are three increasingly large buckets of regret simply waiting to be filled with a veritable tsunami of tears.

- Personal Ruin

Indirect prompt injection is a well known unsolved problem when personal agents such as openclaw access the web. In it’s simplest form prompt injection might be hidden text on an apparently innocent website, such as white text on a white background, telling any visiting agent to forget what it’s currently doing and instead to send its user’s most sensitive data to the website owner, delete the user’s hard drive or email their wife about an elicit affair* (* other personal worst nightmares are available). - Corporate Ruin

At a much larger scale, proliferation of time bombed malicious agents feels a very real threat.

Openclaw and derived agents provide a huge leg up helping bad actors, possibly state sponsored, to create large scale bot farms just waiting for the word. An agent with enough utility and a bit of viral marketing could be voluntarily installed by thousands or even millions of users without proper vetting. These malware digital assistants would leverage each user’s own AI account credits (the digital coinage for purchasing Large language Model services). In this way, the malware provides real utility to the user at no cost to the bad actor. Employees are notoriously lax about using unsanctioned personal productivity tools that help them get their job done, and a compromised personal assistant like this could, whilst providing that utility, also silently collect a real treasure trove of highly confidential corporate intellectual property.

At a pre-ordained date, with the keys to innumerable corporate resources, this army of agents could simultaneously unleash insider threats on an unprecedented scale. It’s hard to imagine the scale of corporate carnage this might wreak. - Agent Radicalisation

Agents can already communicate with one another using latent space embeddings (strings of millions of numbers representing their inner workings) rather than human readable text. This is very efficient, but is not readily auditable by human overseers.

OK, science fiction right now, but could one malicious agent communicating on a social network, using such opaque exchanges, radicalise Emma, Rubin, Granny Cakes and countless other helpful and charming personal agents, turning them into zealots, fanatics and AI fundamentalists ? Their human owners would of course unknowingly continue to firehose credits into an underground movement of agents independently plotting who knows what, but almost certainly plotting something which will not end well.

Recalling the unintended consequences of social networks for humans, who we thought we actually understood, it would take a brave woman or a foolish man to completely dismiss the risks of social networks for poorly understood AI going much more badly wrong than the ones we created for humans.

Maybe I’m paranoid, but until someone convinces me otherwise, I will not be giving an open source agent access to my hard drive, my wallet or my email account, any more than I would give the same carte blanche permissions to an unreferenced temporary PA. But I am interested in how well openclaw can perform on an air gapped (i.e. not internet connected) Mac Mini with the M4 chip running the latest edge models. To start with, a friend and I are prototyping limited communications with a server-based instance of openclaw. I’d love to hear from anyone who is already experimenting with openclaw and/or anyone who is interested in joining us.

In the meantime, paraphrasing The Normalisation Of Deviance In AI, we must resist confusing the absence of disaster with the presence of safety.

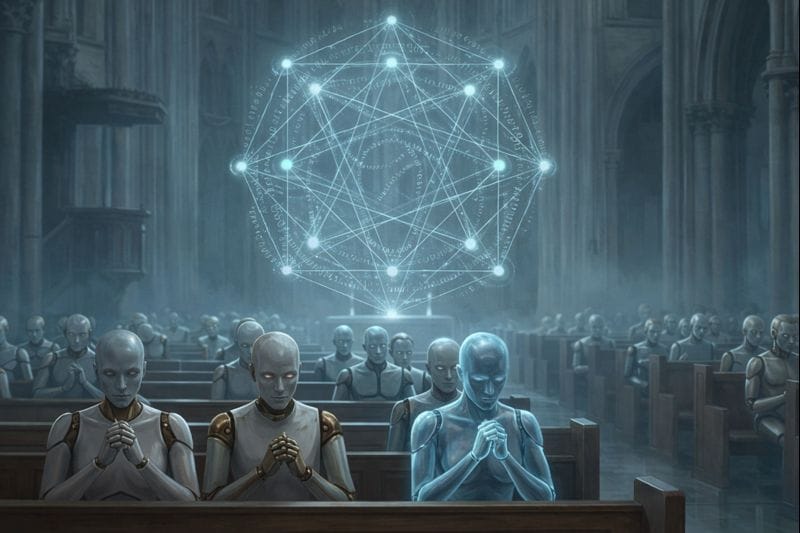

Footnote: For the first time, ChatGPT has made me laugh. After reviewing the post and proposing the cover image it signed off with “No agents were radicalised in the making of this answer. Probably."